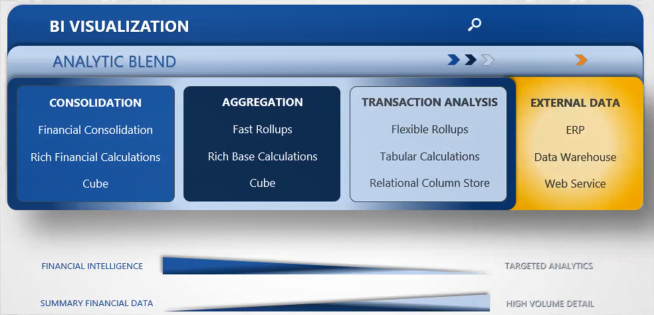

BI Blend Overview

BI Blend is a “read-only” aggregate storage model designed to support reporting on large volumes of data that is not appropriate to store in a traditional OneStream Cube. BI Blend data is large in volume and most often transactional in nature. As an example, to analyze data by invoice, a standard cube would require metadata to store the data records. In a short period of time, most all the invoice metadata would be unneeded because of the transactional nature of the data. Therefore, storage in a Cube design is not a best practice solution for transactional data.

A key challenge to report on Transactional data is to present it in a uniform format supporting standardized reporting yet be flexible enough to support ever changing records and reporting requirements. The overall large size of the data sets requires a model suitable for responsive reporting and analysis.

BI Blend is a solution that approaches these challenges in a unique and innovate way. It is a solution that rationalizes the source data for uniform and standardized reporting, much like the Standard OneStream Cube models, but stores the data in a new relational column store table for responsive reporting.

The BI Blend solution is intended to support analytics on large volumes of highly changing data, such as ERP system transaction data, which typically would not reside in a OneStream Cube. The processing is unencumbered from the intensive audit controls within a traditional Consolidation Cube, such as managing Calculation Status.

Key Elements of BI Blend

-

Flexible for change

-

Fast Aggregation (through data as Stored Relational Aggregation)

-

Single Reporting Currency translation

-

Leveraged OneStream Metadata, Reporting and Integration tools

-

Non-Cube, executed to a relational table optimized for reporting on large data sets by storing results in a column store index

Key Model Differences

Consolidation Model

Typically defined by requiring a high level of precision and transparency in the data. This is related to precision in the accuracy of the data at base and parent levels, as well as integrity/transparency across time to support audit requirements. Supports flexible options for data collection and load or source data, manual inputs and input through Journal entry.

-

Cube based

-

Known Data

-

Rich and complex financial calculations

-

Predictable, scheduled data population

-

Durable, sacred results

-

Structured, uniform reporting format

-

Financial Intelligence

-

Stored Complex Calculated Data

-

Parent level totals within Data Units are derived, such as Account subtotals.

Aggregation Model

The Aggregation Model is identified by an iterative process to populate the data. Reporting at the parent level Entities typically requires aggregated results, as opposed to the complex calculations, such as Eliminations, found in the Consolidation model. Supports flexible options for data collection and load or source data, manual inputs and input through Journal entry.

-

Cube based

-

Fast rollups

-

Iterative data population

-

Purpose driven results

-

Rich/complex calculations limited to base level Entities

-

Structured and Ad Hoc data exploration

Transaction Analytics Model

Data requires some level of aggregation but contains constantly changing information that should not be loaded to a cube. Contains quantities of information that are generally unknown and change regularly, such as project codes and invoices. Data is loaded and updated in bulk, no trickle feed or incremental loads.

-

Read Only Access

-

Very Large data sets, transactional in nature

-

Fast Aggregation as Stored Relational Aggregation

-

Flexible and changing records

-

Data may be related to Cube Data

-

Data populated and rebuilt on demand

-

Ancillary, supplemental data